tensorflow - Understanding states of a bidirectional LSTM in a seq2seq model (tf keras) - Stack Overflow

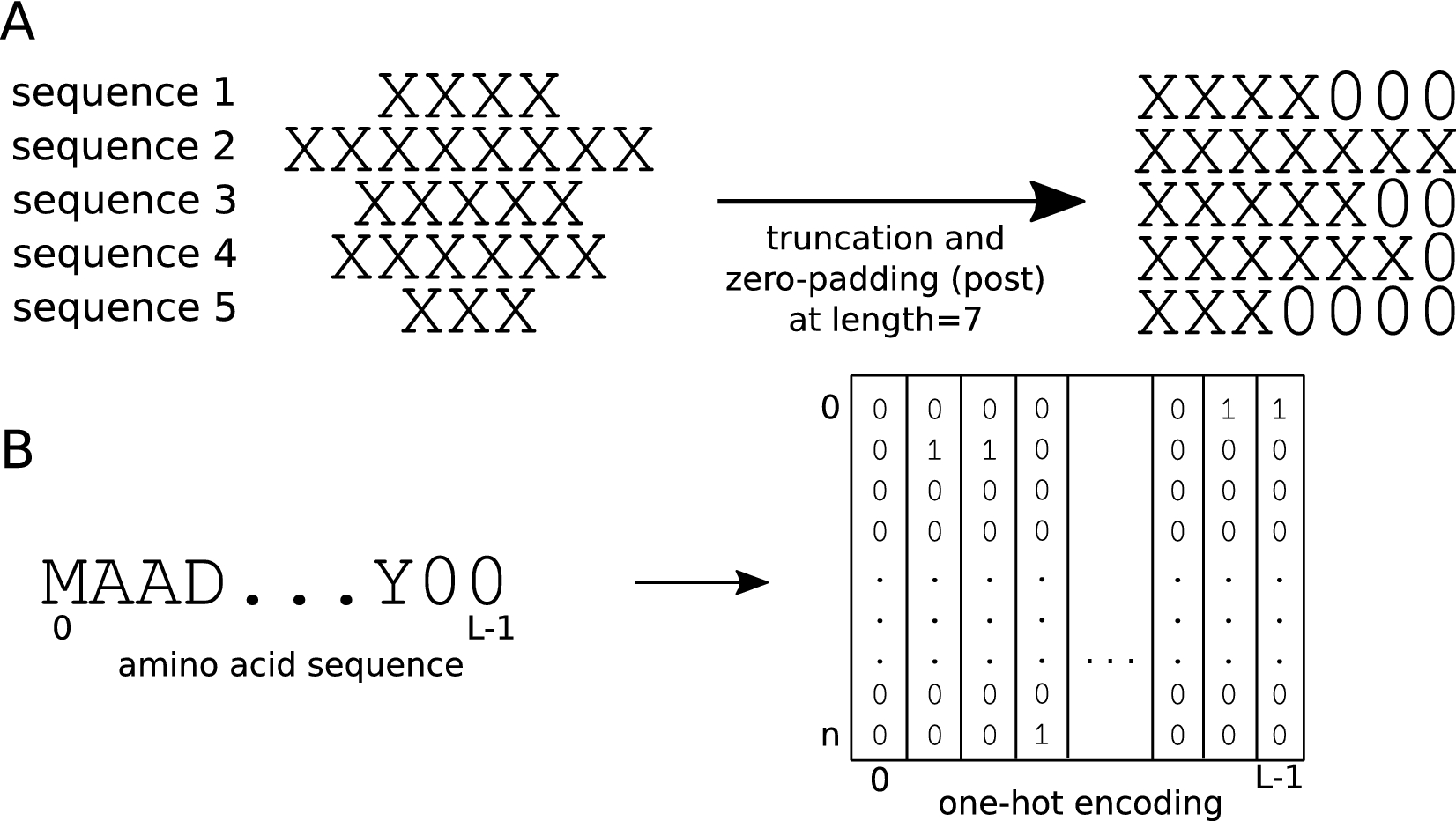

Effect of sequence padding on the performance of deep learning models in archaeal protein functional prediction | Scientific Reports

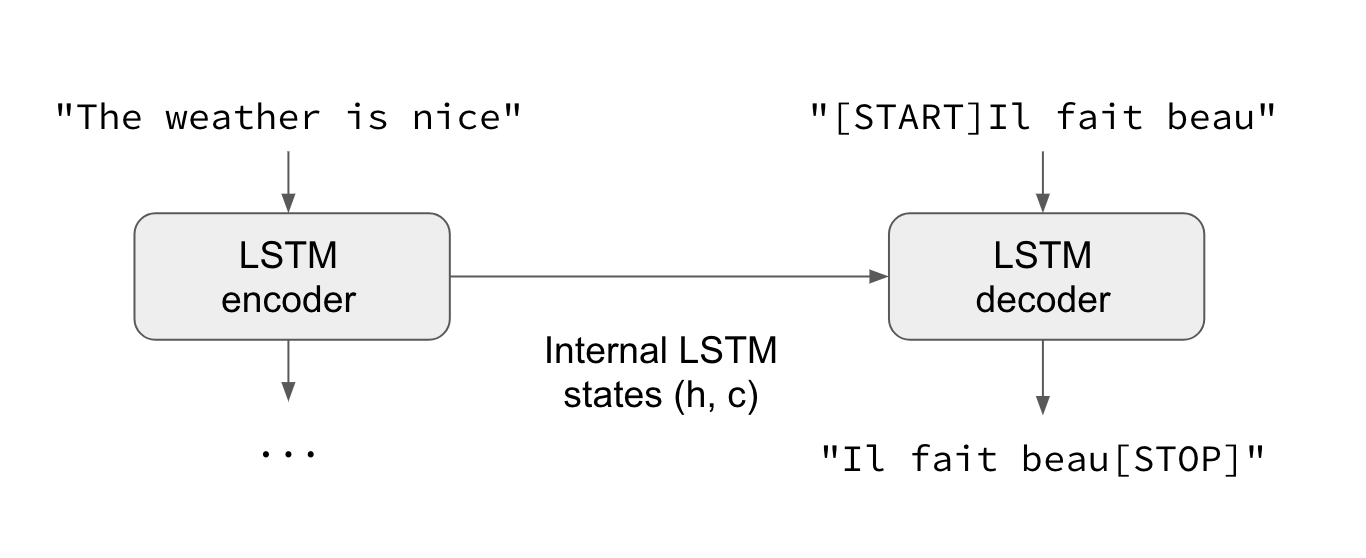

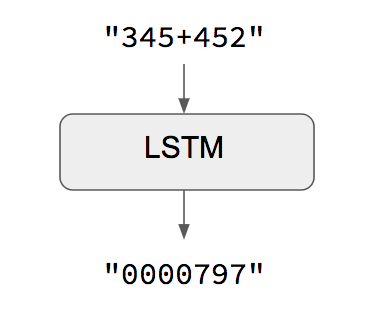

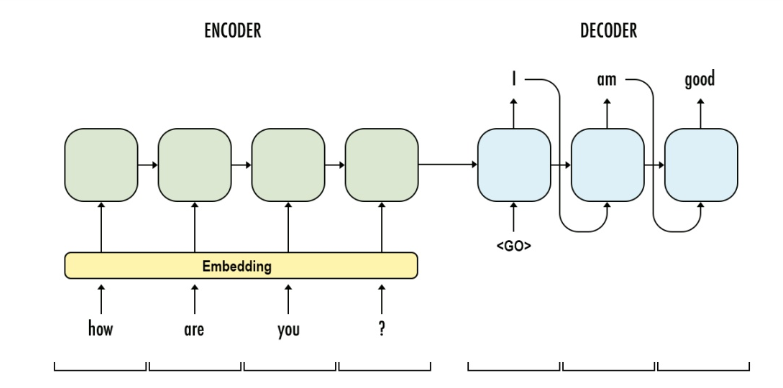

GitHub - philipperemy/keras-seq2seq-example: Toy Keras implementation of a seq2seq model with examples.

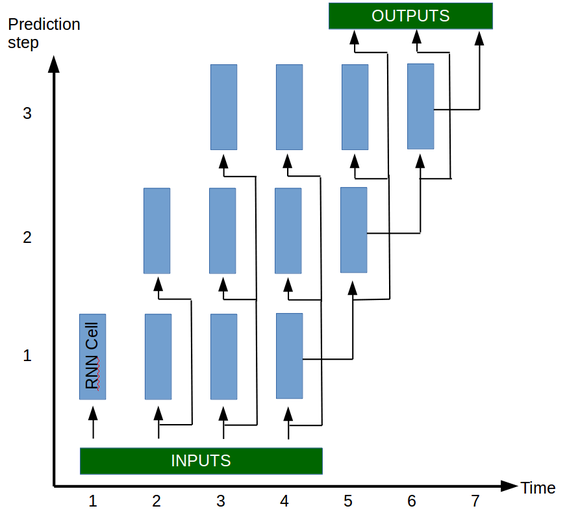

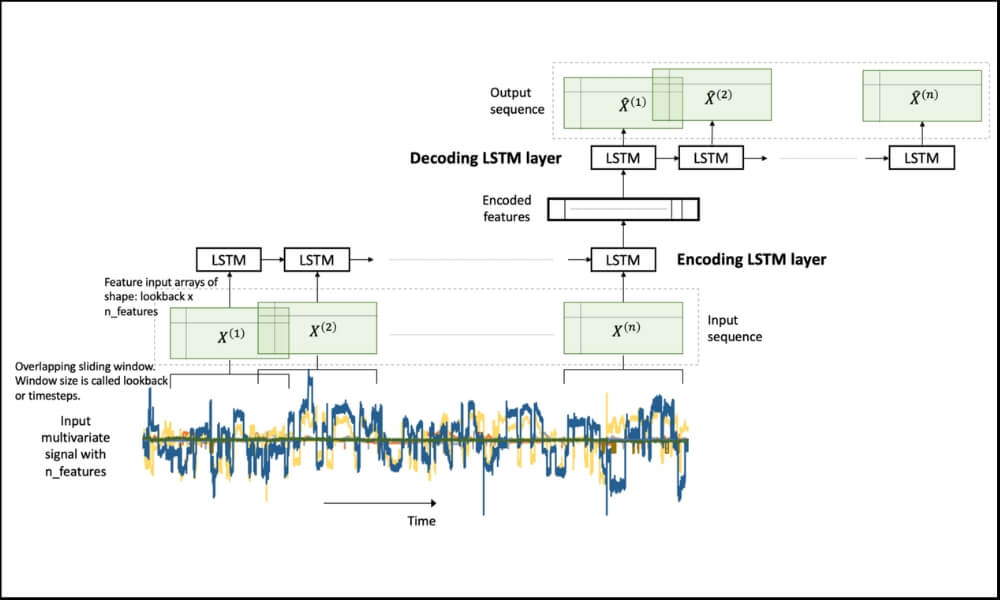

Keras implementation of an encoder-decoder for time series prediction using architecture - Away with ideas

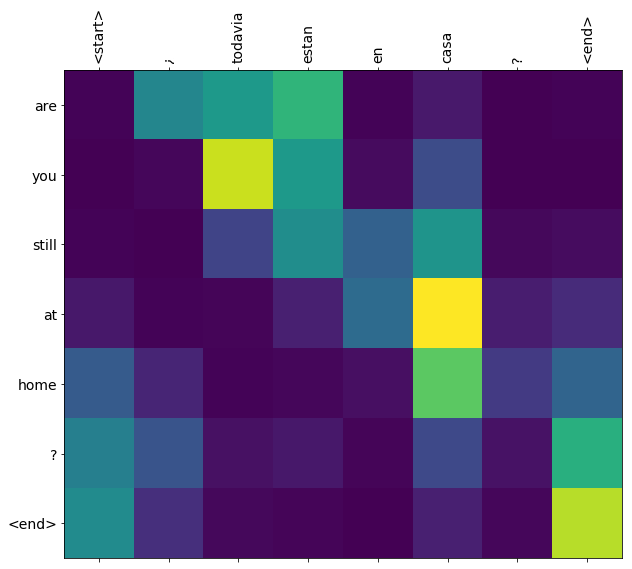

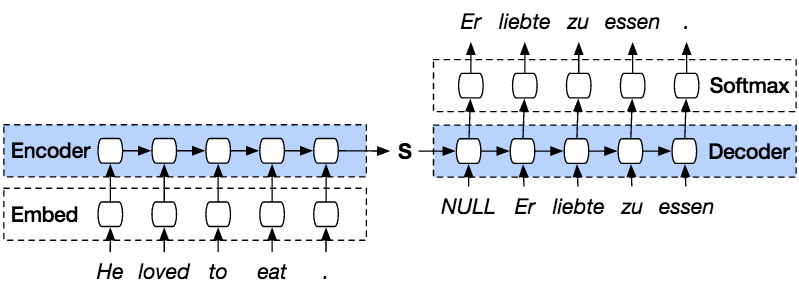

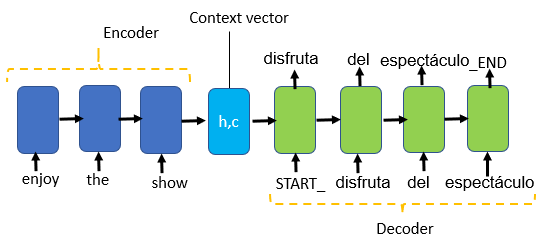

10.7. Encoder-Decoder Seq2Seq for Machine Translation — Dive into Deep Learning 1.0.0-beta0 documentation

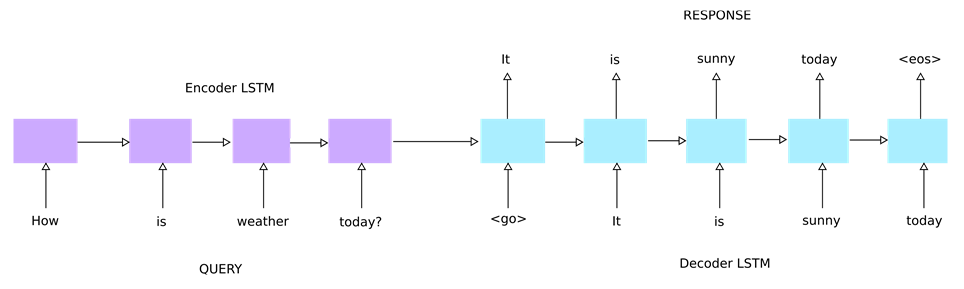

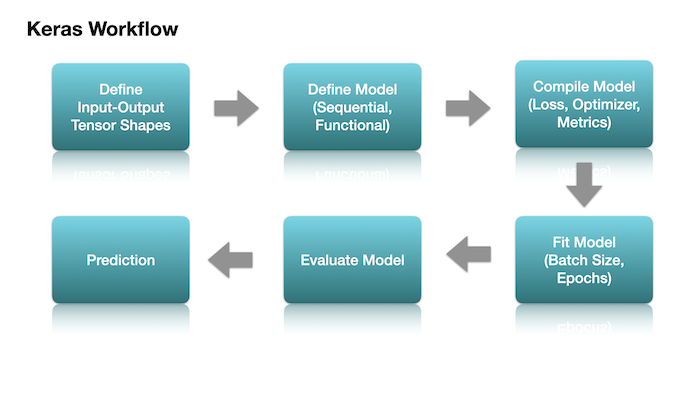

![[2022] What Is Sequence-to-Sequence Keras Learning and How To Perform It Effectively | Proxet](https://proxet.com/wp-content/uploads/2022/01/83_cover.png)

![[2022] What Is Sequence-to-Sequence Keras Learning and How To Perform It Effectively | Proxet](http://proxet.com/wp-content/uploads/2022/01/83_content_1.png)