Mutual-learning sequence-level knowledge distillation for automatic speech recognition - ScienceDirect

Structure-Level Knowledge Distillation For Multilingual Sequence Labeling: Paper and Code - CatalyzeX

GitHub - Alibaba-NLP/MultilangStructureKD: [ACL 2020] Structure-Level Knowledge Distillation For Multilingual Sequence Labeling

![[PDF] Sequence-Level Knowledge Distillation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/32a93598e8a338496f04a0ace81b0768c2ef059d/8-Table1-1.png)

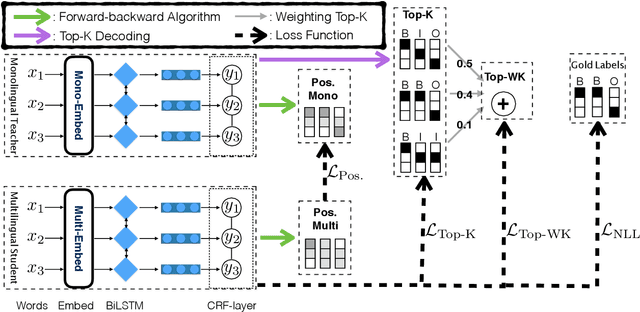

![[PDF] Structure-Level Knowledge Distillation For Multilingual Sequence Labeling | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/82eb7ed876778df5114e92ba8156444ec83461f5/3-Figure1-1.png)

![[PDF] Sequence-Level Knowledge Distillation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/32a93598e8a338496f04a0ace81b0768c2ef059d/6-Figure2-1.png)

![Knowledge distillation in deep learning and its applications [PeerJ]](https://dfzljdn9uc3pi.cloudfront.net/2021/cs-474/1/fig-4-full.png)

![[PDF] Sequence-Level Knowledge Distillation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/32a93598e8a338496f04a0ace81b0768c2ef059d/4-Figure1-1.png)

![Knowledge distillation in deep learning and its applications [PeerJ]](https://dfzljdn9uc3pi.cloudfront.net/2021/cs-474/1/fig-1-full.png)